Artificial intelligence (AI) systems simulate human intelligence by learning, reasoning, and self-correction. The technology is promising: it could reduce patient waiting times, improve diagnosis, and relieve an overburdened NHS. These are benefits that apply across the field, regardless of race.

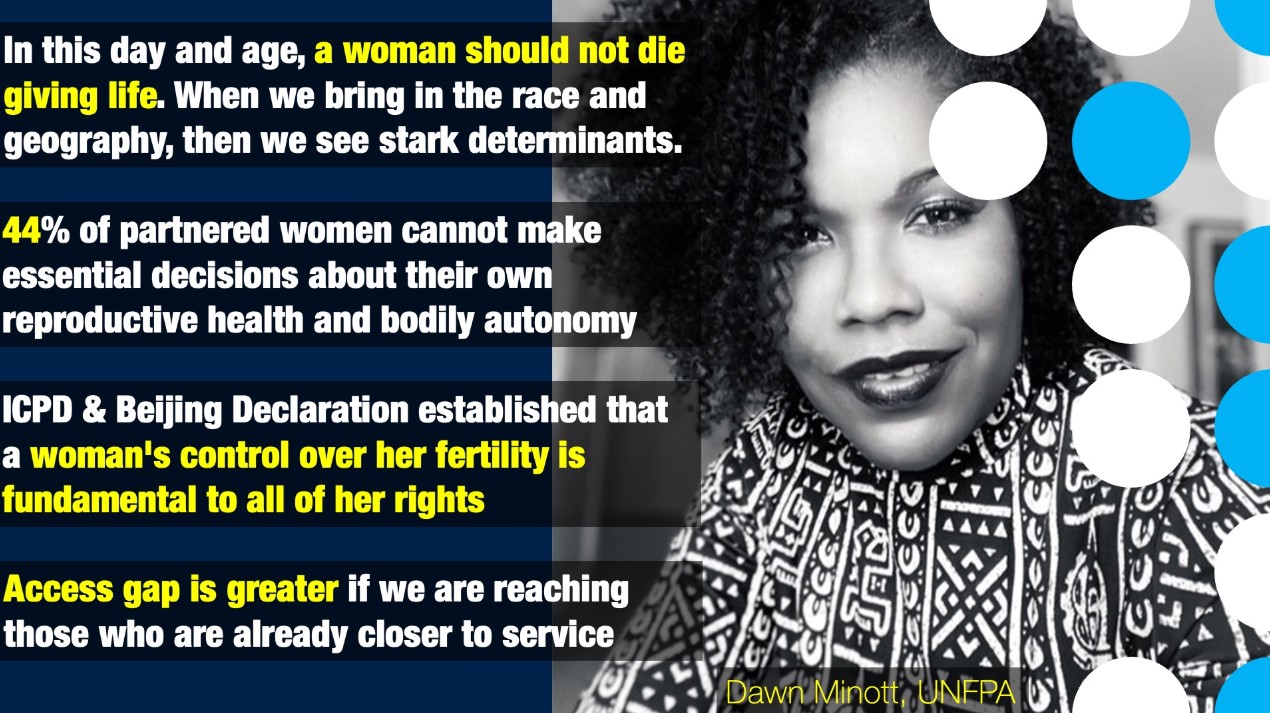

But there is growing concern that if the data being used to train AI are not ethnically representative, the technology may not work as well for some groups.

For example, images used to train algorithms to detect melanoma are predominantly, if not exclusively, images of Caucasian skin. In practice, that could mean an AI that works as well as the best-trained clinicians for a white person but doesn’t recognise cancerous moles on a black person.

What’s more, if companies are not obliged to disclose this data, people may continue to think that AI is relevant to everyone when it is not.

Professor Adewole Adamson at the University of Texas says it is fundamental to AI’s success that products work for everyone regardless of skin colour, and that where they do not, a warning is provided.

Eleonora Harwich from the think tank Reform warns that bias and discrimination are difficult things to regulate, and suggests that market incentives could provide more representative data.

But others argue that market incentives can’t overcome the fact that there are hundreds of years of medical research missing for people from ethnic minority backgrounds.

According to Adamson, the cost of not fixing this could be huge. AI could misdiagnose people, underdiagnose people, and potentially increase health-care disparities, he warns.

For that reason, he says we need to keep in mind that AI might be an amazing tool, but it is no replacement for the complex human thinking required for ethical decision-making.
